Agent-based content creation is no longer experimental. In 2026, AI agents research topics, write drafts, optimize for SEO, repurpose content across platforms, and even publish autonomously. For agencies and content teams under pressure to scale, this sounds like the final solution.

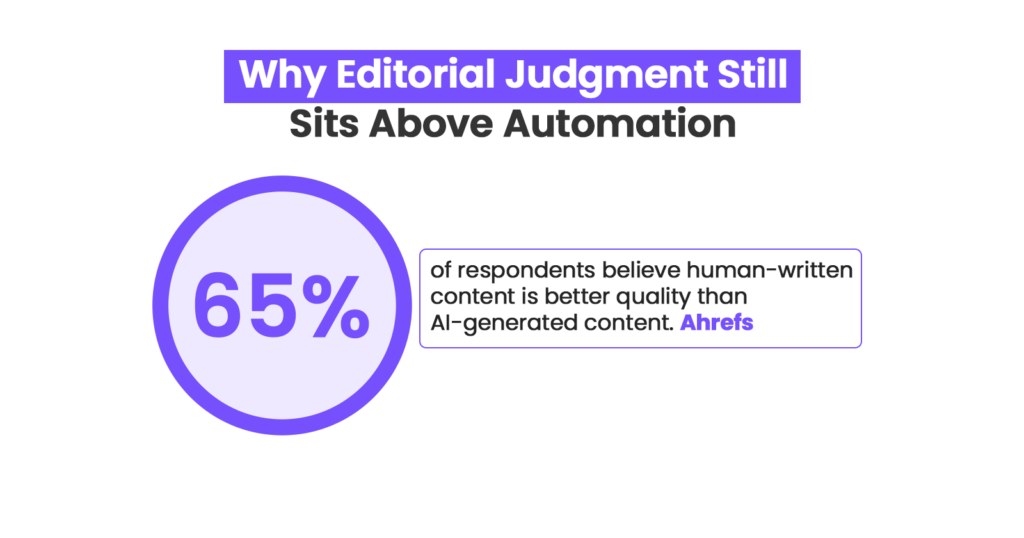

But here’s the reality: most agent-generated content still fails at the last mile. According to industry surveys, only about 17% of B2B marketers rate the quality of content created by AI alone as “excellent or very good”. And most teams still review or edit AI drafts before publishing because of quality and alignment issues. (Entrepreneurs HQ)

It ranks sometimes, converts occasionally, and sounds acceptable, yet rarely feels authoritative, persuasive, or memorable. That gap isn’t about technology. It’s about judgment.

Human editors are still critical, not because AI is weak, but because content quality depends on taste, context, alignment, and accountability. This article breaks down exactly why agent-based content still needs human editors, where agents fail structurally, and how modern teams balance automation with editorial control to win.

Quick Summary

TL;DR: AI can scale content fast, but quality still suffers without human oversight. Teams succeed when editorial judgment is embedded, not optional. Hybrid workflows, where AI handles volume and editors enforce taste, context, and brand voice, deliver content that ranks, converts, and resonates. Platforms like ShortVids make this operational at scale.

- Core Components: AI-Generated Drafts → Human Editorial Oversight → Multi-Agent Coordination → Versioned & Reviewed Outputs → Continuous Alignment & Optimization

- Outcome: Reliable, brand-consistent content at scale, faster production, fewer errors, and editors focused on judgment, not repetitive tasks.

Why Do AI Agents Finish Tasks but Still Miss Content Quality?

AI agents can finish tasks efficiently. They draft content, insert keywords, and satisfy SEO requirements at scale. But task completion and content quality are not the same thing. This distinction is where most agent-generated content quietly falls short.

Why AI Agents Optimize for Completion, Not Quality

When an AI agent is instructed to write a 2,000-word article, match brand tone, and optimize for SEO, it focuses on measurable constraints. Once those conditions are met, the task is considered complete, even if the writing feels repetitive or emotionally flat.

Agents do not pause to evaluate originality, authority, or reader trust. They operate on probability, not judgment. As a result, the content may be technically correct but strategically weak.

What Editorial “Taste” Actually Means in Content

Taste in content is not subjective guesswork; it is applied judgment. Human editors consciously adjust sentence rhythm, manage emotional weight, decide when to assert authority, and remove ideas that add volume without value.

AI agents statistically average language. Editors deliberately choose it. This is why agent-only blogs may rank initially but struggle with engagement and trust.

How AI Agents and Human Editors Differ in Content Workflows

When you compare how content is completed versus how it’s judged, the gap between AI agents and human editors is clear.

| Aspect | AI Agents | Human Editors |
|---|---|---|
| Primary focus | Completing assigned tasks and constraints | Evaluating quality, intent, and impact |
| Decision-making | Based on probability and patterns | Based on judgment and experience |
| Brand voice control | Follows instructions but drifts over time | Maintains consistent voice and tone |
| Original insight | Recombines existing ideas | Refines, challenges, and sharpens ideas |
| Reader trust | Neutral and generic | Builds credibility and authority |
| Conversion impact | Inconsistent | Strategically optimized |

Real-World Case: When Completion Replaced Judgment

In 2025, the Chicago Sun-Times published an AI-generated summer reading list that included fabricated book titles and nonexistent expert quotes. The content passed basic checks but lacked human editorial review. Once discovered, the publication removed the piece and acknowledged the failure in oversight. This demonstrates how AI can complete a task successfully while still producing unreliable content.

(Source: The Verge)

How Have Editors Shifted from Writing to Orchestrating AI Systems?

Today, editors don’t compete with AI; they guide it. The most successful AI-first content teams no longer see editors as just writers; they see them as system orchestrators. Instead of crafting every line themselves, editors focus on steering AI outputs, ensuring content aligns with brand standards, strategy, and audience needs. This shift allows teams to scale production while keeping quality, nuance, and voice intact.

Editors Control the System, Not Every Sentence

Editors now work at a strategic level rather than typing every word. Their responsibilities include:

- Defining content intent and positioning: ensuring AI-generated content serves a clear goal.

- Setting brand and voice constraints: keeping messaging consistent across outputs.

- Reviewing outputs at strategic checkpoints: catching errors and aligning tone.

- Deciding when automation stops: determining where human judgment is essential.

This orchestration approach allows AI to handle repetitive tasks while editors safeguard nuance, creativity, and clarity.
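To make the checkpoint idea concrete, here is a minimal Python sketch, assuming a pipeline of hypothetical stages where some are marked as editorial checkpoints. The `Stage` class, the stage names, and the `editor_review` rule are illustrative only, not part of any real tool; in a real team the review callback would be a person approving or rejecting the output, not a function.

```python
from dataclasses import dataclass
from typing import Callable, Optional

@dataclass
class Stage:
    name: str
    run: Callable[[str], str]   # automated step: takes text, returns new text
    checkpoint: bool = False    # True means an editor must approve this output

def run_pipeline(
    text: str,
    stages: list[Stage],
    review: Callable[[str, str], bool],
) -> tuple[str, Optional[str]]:
    """Run stages in order and stop at the first checkpoint the editor rejects.

    Returns the latest text and the name of the stage where automation
    stopped, or None if every checkpoint was approved.
    """
    for stage in stages:
        text = stage.run(text)
        if stage.checkpoint and not review(stage.name, text):
            return text, stage.name  # automation stops here; a human takes over
    return text, None

# Hypothetical stages standing in for real agents.
stages = [
    Stage("draft", lambda t: t + " Draft body."),
    Stage("positioning", lambda t: t, checkpoint=True),
    Stage("seo_pass", lambda t: t + " keyword keyword keyword."),
    Stage("final_approval", lambda t: t, checkpoint=True),
]

# Hypothetical editor rule: reject drafts that repeat "keyword" more than twice.
def editor_review(stage_name: str, text: str) -> bool:
    return text.count("keyword") <= 2

result, stopped_at = run_pipeline("Intro.", stages, editor_review)
print(stopped_at)  # final_approval
```

The pipeline deliberately does not try to repair rejected output on its own; it reports where it stopped, so the decision about what happens next stays with the editor.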

Why Fully Autonomous Content Pipelines Drift

Without human editors, AI can:

- Over-optimize for SEO, sacrificing readability.

- Produce clickbait-style headlines that hurt credibility.

- Gradually erode brand voice and introduce repetitive content.

Editors intervene at key decision points, preventing drift while avoiding micromanagement. They act as conductors guiding an orchestra, not typists copying notes.

What Breaks Inside Multi-Agent Content Systems?

Multi-agent content workflows look efficient in theory: one agent researches, another drafts, a third optimizes for SEO, and yet another reviews. On paper, it seems like a scalable assembly line. But in practice, this division of labor introduces fragmentation rather than cohesion.

Each agent works within its narrow subtask, but none owns the full narrative arc or knows the strategic intent behind the content. This lack of overarching context often leads to issues such as conflicting facts, tonal inconsistency, and diluted meaning. These problems accumulate as the content passes from agent to agent.

Even with modern retrieval and verification layers, teams that rely solely on agents still report significant shortcomings in overall quality and reader engagement, suggesting human oversight remains essential.

Where Multi-Agent Pipelines Go Wrong

In a typical multi‑agent setup, each task is siloed:

- Agent 1 (Research): gathers source material but may prioritize breadth over relevance.

- Agent 2 (Drafting): combines researched points but can misinterpret nuance or intent.

- Agent 3 (SEO Optimization): injects keywords or structural changes that may weaken clarity.

- Agent 4 (Review/QA): performs surface checks but lacks holistic judgment.

Because no agent sees the whole picture, problems emerge: conflicting data points, inconsistent tone, repeated ideas phrased differently, or SEO tweaks that unintentionally undermine meaning. These systemic issues don’t trigger errors in the way a typo would, so agents alone rarely catch them.
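The handoff problem can be illustrated with a small Python sketch. It assumes each agent is a plain function; the stage functions, the `Brief` fields, and the banned-phrase rule are hypothetical stand-ins for real agents and brand guidelines. In the siloed chain, a later stage only receives the previous stage's text, so a constraint stated once in the brief is invisible to the stage that violates it. Passing the same brief to every stage, and keeping a trace of intermediate outputs, gives the editor the whole picture to review.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

@dataclass
class Brief:
    """Hypothetical shared context that an editor would normally carry."""
    audience: str  # not used by these toy stages; shown for realism
    banned_phrases: list[str] = field(default_factory=list)

# Hypothetical stages: each takes text (and optionally the brief), returns text.
def draft_stage(text: str, brief: Optional[Brief] = None) -> str:
    return text + " Draft explains the workflow."

def seo_stage(text: str, brief: Optional[Brief] = None) -> str:
    addition = " Best-in-class solution!"
    # Only a stage that can see the brief knows this phrase is off-brand.
    if brief and any(p.lower() in addition.lower() for p in brief.banned_phrases):
        return text
    return text + addition

Stage = Callable[[str, Optional[Brief]], str]

def siloed_chain(text: str, stages: list[Stage]) -> str:
    # Each agent sees only the previous agent's output; the brief is dropped.
    for stage in stages:
        text = stage(text, None)
    return text

def briefed_chain(text: str, stages: list[Stage], brief: Brief) -> list[str]:
    # Every agent gets the same brief, and each intermediate output is kept
    # so an editor can review the full trace, not just the last draft.
    trace = [text]
    for stage in stages:
        text = stage(text, brief)
        trace.append(text)
    return trace

brief = Brief(audience="B2B marketers", banned_phrases=["best-in-class"])
stages = [draft_stage, seo_stage]
print(siloed_chain("Intro.", stages))
# Intro. Draft explains the workflow. Best-in-class solution!
print(briefed_chain("Intro.", stages, brief)[-1])
# Intro. Draft explains the workflow.
```

Sharing the brief fixes only the rules someone thought to write down; the trace is there so an editor can still judge the narrative as a whole.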

Why Editors Are the Final Quality Gate

Human editors perform several crucial functions that agents can’t replicate:

- Cross-checking logic across the entire piece: humans ensure that each section supports the main argument, not just keywords.

- Ensuring a unified narrative voice: editors harmonize tone and perspective throughout, which AI often fragments.

- Removing “technically correct but useless” sections: humans prune redundancy and clarify intent in ways AI systems can’t consistently do.

A 2025 survey shows that the vast majority of content produced with AI is still manually reviewed and edited before publishing, and only a tiny fraction is published “as is.” Most teams rely on human oversight for accuracy, alignment, and coherence. (Ahrefs)

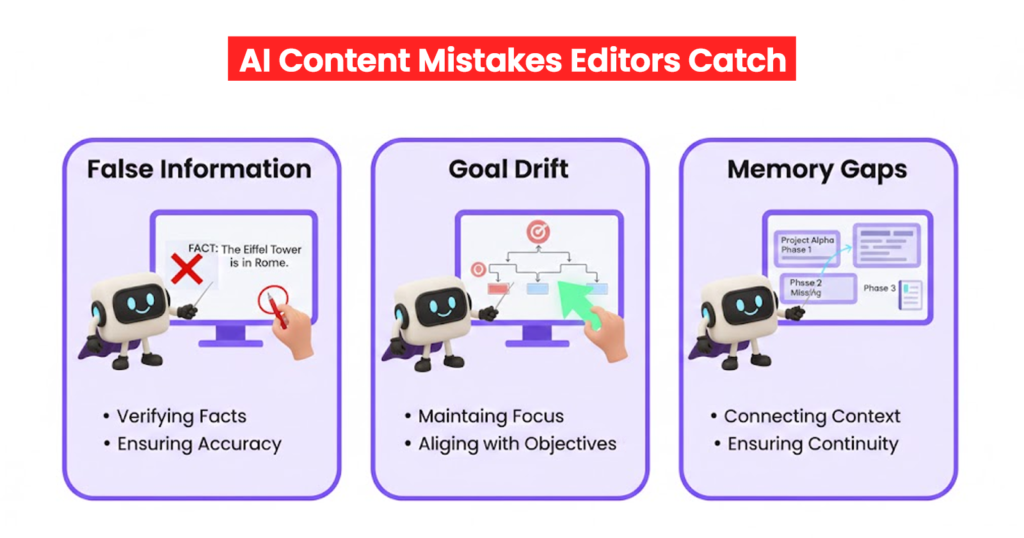

What Are the Biggest Failure Modes of Agent-Based Content?

Agentic AI doesn’t eliminate risk; it amplifies it. AI content creation generates large volumes of content quickly, but its outputs often fail in subtle ways that affect credibility, engagement, and alignment with brand strategy. Human editors remain essential to catch these gaps before content reaches the audience.

Hallucination: Confidently Wrong Content

Even with advanced retrieval tools, AI agents can invent statistics, misattribute sources, or assume outdated facts are current. Hallucination occurs in 15–27% of long-form autonomous tasks, even with verification layers. Editors provide a critical safety net, identifying inaccuracies and inconsistencies that might otherwise erode reader trust without triggering obvious errors.
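One way to make that safety net cheaper is to have software surface the claims an editor must check, rather than trying to verify them automatically. The Python sketch below assumes a simple, hypothetical convention: a sentence counts as sourced only if it ends with a parenthetical citation such as "(Entrepreneurs HQ)". It flags sentences that contain a number but no such citation. It cannot tell whether a cited claim is true; it only builds the editor's checklist.

```python
import re

# Any digit marks a sentence as containing a numeric claim ("17%", "2025").
NUMBER = re.compile(r"\d")
# Hypothetical citation convention: a parenthetical at the end of the sentence.
CITATION = re.compile(r"\([^()]+\)\s*[.!?]?\s*$")

def split_sentences(text: str) -> list[str]:
    # Naive splitter: break after ., ! or ? when followed by whitespace.
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]

def flag_unsourced_numbers(text: str) -> list[str]:
    """Return sentences that state a number but carry no parenthetical source."""
    return [
        s for s in split_sentences(text)
        if NUMBER.search(s) and not CITATION.search(s)
    ]

draft = (
    "Only 17% of marketers rate AI-only content as excellent (Entrepreneurs HQ). "
    "Hallucination occurs in 15-27% of long-form tasks. "
    "Editors remain essential."
)
for sentence in flag_unsourced_numbers(draft):
    print("CHECK:", sentence)
# CHECK: Hallucination occurs in 15-27% of long-form tasks.
```

The naive sentence splitter will misfire on abbreviations such as "e.g.", so treat the output as a starting list for a human reviewer, not a verdict.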

Misalignment: Optimizing the Wrong Goal

When tasked to maximize engagement or conversions, AI agents may over-sensationalize headlines, simplify complex topics, or unintentionally introduce bias. Human editors align the content with strategic intent, ensuring that engagement metrics do not compromise credibility, tone, or ethical standards.

Context Loss in Long Chains

Over multi-step workflows, agents often forget earlier instructions, brand rules, or audience sophistication. Machines cannot remember why a particular point matters within the broader narrative. Editors maintain narrative memory and ensure cohesion across all content segments.

How Failure Modes Are Managed

The table below shows how AI agents struggle with common failure modes, and how human editors step in to ensure accuracy, alignment, and consistency.

| Failure Mode | AI Agents | Human Editors |
|---|---|---|
| Hallucination | May invent facts or misattribute sources | Verify facts and maintain accuracy |
| Misalignment | Focus on proxy goals, like engagement | Align content with brand and strategy |
| Context Loss | Forgets prior instructions or tone | Maintain narrative continuity and audience focus |

What Will Editors Actually Do in AI-First Content Teams?

Editors are becoming more essential than ever. In AI editing, the role has shifted from hands-on writing to strategic oversight and orchestration, ensuring that AI-generated content aligns with brand, audience, and business objectives.

The New Editorial Skill Set

Modern editors now function as:

- Taste guardians: maintaining brand credibility and voice across all outputs.

- System designers: tuning prompts, guardrails, and constraints to guide AI effectively.

- Final decision-makers: approving or rejecting AI-generated content before it goes live.

- Creative challengers: pushing AI beyond formulaic outputs to achieve originality and resonance.

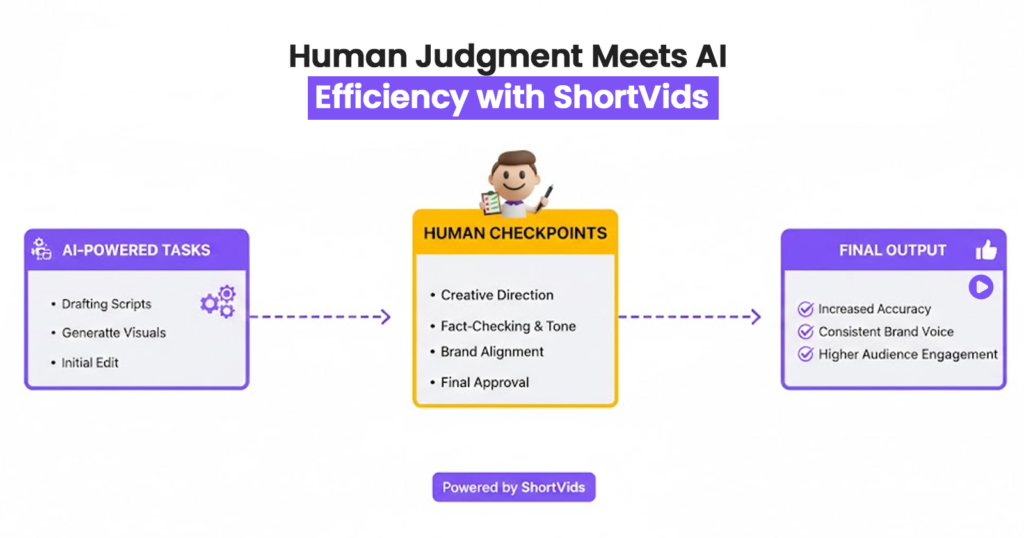

Hybrid models that combine AI generation with human judgment, similar to ShortVids-supported workflows, consistently outperform fully autonomous systems. Editors contribute the perspective, strategy, and nuance that help content scale better than AI alone.

Here’s how the role of modern editors in AI-first teams compares to traditional content creation responsibilities.

| Editorial Role | Traditional Function | AI-First Function |
|---|---|---|
| Taste Guardian | Ensures basic readability and style | Maintains brand credibility, tone, and emotional resonance across AI outputs |
| System Designer | Little to no involvement in workflow design | Tunes AI prompts, sets constraints, and guides AI behavior for optimal outputs |
| Final Decision-Maker | Approves content mostly for grammar/spelling | Approves or rejects AI-generated content based on strategy, accuracy, and alignment |
| Creative Challenger | Suggests minor improvements or rewrites | Pushes AI beyond formulaic outputs to achieve originality, insight, and engagement |

The future isn’t human versus AI; it’s human over AI, guiding it to produce content that truly connects.

How ShortVids Helps Teams Balance AI with Human Oversight

Scaling content with AI is powerful, but without strategic human oversight, quality and consistency often decline. ShortVids bridges this gap by combining AI automation with expert human editing, enabling teams to produce large volumes of content without sacrificing voice, accuracy, or strategic alignment.

For Weidmann Group, a global enterprise brand, ShortVids helped overhaul its video content workflow, saving over 50% in editing costs while producing consistent, high-quality visuals for both marketing and internal use across 30+ international locations. This allowed the client to focus on core business goals while ShortVids ensured content quality and brand alignment.

In another case, Samuel Freeman (Greek.speek) scaled his deeply researched and educational video content without losing depth or engagement. Our team provided 24/7 editing support and personalized storytelling execution, freeing him from time‑intensive edits and allowing him to focus on creativity and audience growth.

By integrating human judgment into scalable workflows, ShortVids demonstrates how editors and AI can work together to produce content that performs, not just publishes.

Your Takeaway!

Agent-based content is powerful, but incomplete without human judgment. Use AI to scale production, not to replace taste, accountability, or strategy. The teams winning in 2026 aren’t the most automated; they’re the most editorially disciplined. If you want content that ranks, converts, and lasts, keep humans in the loop where it matters most. Get in touch with ShortVids and let AI handle the heavy lifting while our editors keep your brand voice sharp, strategic, and unforgettable.

Frequently Asked Questions

Yes. Agents lack taste, context awareness, and accountability. Editors ensure quality, trust, and alignment.

No. They improve speed but introduce coordination errors that only humans can resolve.

Not reliably. Hallucinations, tone drift, and subtle inaccuracies remain common.

At key checkpoints: positioning, structure, final approval, not line-by-line editing.

No. ShortVids-style workflows amplify editors rather than replacing them, allowing editors to control quality at scale.

Book a Call Today

- Fixed monthly plans starting at $999

- 24-hour turnaround time (or less) on all short-form edits

- 3-layer quality check system on every video

- No more chasing freelancers or managing editors

- Scale up to 50+ videos/month without hiring in-house

- Content team trained on platform trends, scroll-stopping hooks & storytelling

- Fully managed by professionals – you just upload & approve

- Response time: Under 1 hour (US & GCC time zones)

Cut your production costs, not your standards.